This article tests MLP, CNN, and RNN models across five tasks. Heart of the Machine not only summarizes the experiments but also used Keras (with the TensorFlow backend) to test a CNN on the MNIST dataset.

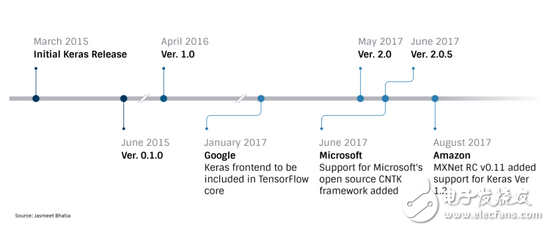

If there are doubts about the popularity of Keras in data science and deep learning, consider the support it receives from all the major cloud platforms and deep learning frameworks. The official version of Keras currently supports Google's TensorFlow, Microsoft's CNTK, and the University of Montreal's Theano. In addition, AWS announced last year that Keras would support Apache MXNet. Last month's release of MXNet 0.11 added support for Core ML and Keras v1.2. So far, however, MXNet appears to support only Keras v1.2.2 rather than the latest version, 2.0.5.

Although a model can be deployed with any backend that Keras supports, developers and solution architects should understand that Keras, as a high-level API over deep learning libraries, does not expose all of the low-level parameter tuning that each library provides. If we want to fine-tune every parameter offered by the backend framework, it is better to use that framework directly instead of Keras. This situation will improve as more tools are added to Keras and to the frameworks. Even so, Keras is already a very good tool: it suits the early stages of deep learning development well and gives data scientists and algorithm engineers a powerful way to quickly build and test complex deep learning models.

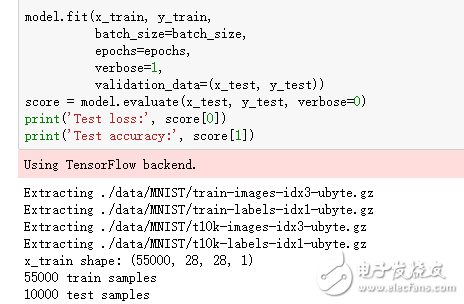

Heart of the Machine also tested Keras with TensorFlow as the backend. We found the whole model very simple to build, so that even a beginner can easily understand the architecture of the entire network. Compared with building a convolutional neural network directly in TensorFlow, using Keras as the high-level API with TensorFlow as the backend is much simpler. We will later upload the Keras CNN implementation, with comments, to the Heart of the Machine GitHub project. The following figure shows training being initialized with TensorFlow as the backend:

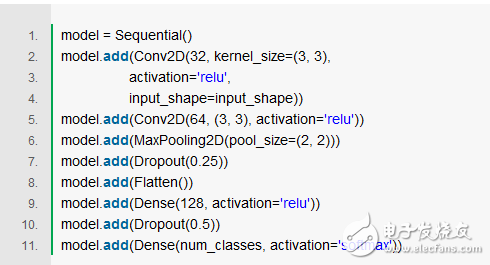

The following is the architecture of the entire convolutional network:

The above code clearly defines the layers stacked in the network. Sequential denotes a sequential model, that is, a linear stack of network layers. After creating the sequential model, we add layers one by one, starting from the input layer, to build the entire network. The architecture first uses the 2D convolutional layer Conv2D with a 3×3 convolution kernel and the ReLU activation function; the first argument, 32, is the number of convolution kernels (filters). The network also uses the max pooling layer MaxPooling2D, where pool_size=(2, 2) is the downsampling factor in the two directions (vertical, horizontal); the Dropout layer, which randomly disconnects input neurons with probability 0.25 at each parameter update during training; the Dense layer, which is a fully connected layer; and the Flatten layer, which flattens its multidimensional input into one dimension and is commonly used in the transition from convolutional layers to fully connected layers. These are the basic layers of the architecture. For more detailed code and comments, see the Heart of the Machine GitHub project.
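As a rough check on the layer descriptions above, the following sketch traces how the tensor shape changes through a Conv2D, MaxPooling2D, Dropout, and Flatten stack. It is plain Python arithmetic rather than Keras code, and it assumes a 28x28x1 MNIST input, no padding, and stride 1. None of these settings are stated in the text, and the helper names are hypothetical.

```python
def conv2d_shape(shape, filters, kernel=(3, 3)):
    """Output shape of a stride-1, unpadded 2D convolution (assumed settings)."""
    height, width, _ = shape
    return (height - kernel[0] + 1, width - kernel[1] + 1, filters)


def max_pool_shape(shape, pool_size=(2, 2)):
    """Output shape of max pooling: each spatial axis shrinks by its factor."""
    height, width, channels = shape
    return (height // pool_size[0], width // pool_size[1], channels)


def flatten_shape(shape):
    """Flatten collapses all dimensions into a single one."""
    size = 1
    for dim in shape:
        size *= dim
    return (size,)


def trace_shapes(input_shape=(28, 28, 1)):
    """Return (layer name, output shape) pairs for the described stack."""
    steps = [("input", input_shape)]
    shape = conv2d_shape(input_shape, filters=32)
    steps.append(("Conv2D(32, 3x3, relu)", shape))
    shape = max_pool_shape(shape)
    steps.append(("MaxPooling2D(2, 2)", shape))
    # Dropout only zeroes activations during training; the shape is unchanged.
    steps.append(("Dropout(0.25)", shape))
    shape = flatten_shape(shape)
    steps.append(("Flatten", shape))
    return steps


for name, shape in trace_shapes():
    print(f"{name:24s} {shape}")
```

The flattened vector is what a following Dense layer would receive as its input.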

Below are the details of Jasmeet Bhatia's evaluation.

Keras backend framework performance testing

Keras also lets developers quickly test the relative performance of different deep learning frameworks as Keras backends. A parameter in the Keras configuration file determines which framework is used as the backend, so an identical model can be run directly on different frameworks (such as TensorFlow, CNTK, and Theano). For MXNet, which only supports Keras v1.2.2, the code needs a few small modifications. Each model could of course be tuned further with the libraries specific to each framework to get better performance, but Keras still provides a good opportunity to compare the performance of these underlying libraries.
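One way to picture the backend switch: Keras reads a small JSON configuration file (by default `~/.keras/keras.json`) whose `backend` field names the framework. The sketch below rewrites that field using only the standard library. The helper name `set_keras_backend` is hypothetical, and the demo writes to a temporary file rather than the real configuration.

```python
import json
import os
import tempfile


def set_keras_backend(config_path, backend):
    """Set the "backend" field of a Keras JSON config, keeping other keys."""
    config = {}
    if os.path.exists(config_path):
        with open(config_path) as f:
            config = json.load(f)
    config["backend"] = backend
    with open(config_path, "w") as f:
        json.dump(config, f, indent=4)
    return config


# Demo on a throwaway file; the real file normally lives at ~/.keras/keras.json.
demo_path = os.path.join(tempfile.mkdtemp(), "keras.json")
with open(demo_path, "w") as f:
    json.dump({"floatx": "float32", "backend": "tensorflow"}, f)

for name in ("cntk", "theano"):
    updated = set_keras_backend(demo_path, name)
    print(updated["backend"], updated["floatx"])
```

Setting the `KERAS_BACKEND` environment variable before importing Keras achieves the same switch without editing the file.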

Some earlier articles compared the relative performance of the backends supported by Keras, but those comparisons are fairly old and mainly covered TensorFlow and Theano. This article therefore makes a broader comparison based on the latest versions of Keras and the deep learning frameworks.

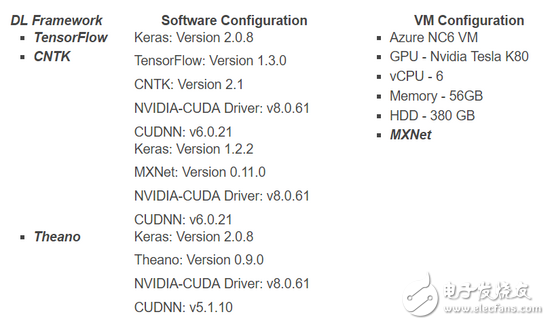

Let's first look at the configuration used for testing. All performance tests were run on an Azure NC6 VM with an Nvidia Tesla K80 GPU, using the Azure DSVM (Data Science Virtual Machine) image on Ubuntu. Alongside other data science tools, Keras, TensorFlow, Theano, and MXNet were pre-installed. All packages were at their latest versions for the tests, except that MXNet used the older Keras 1.2.2, the only version it supports.

Configuration

Because each deep learning framework has different dependencies, our tests ran in the following three configurations:

To compare the performance of the deep learning frameworks, we used five different test models, described below. To ensure that no framework received special treatment, all models were taken from the examples directory of the Keras GitHub repository.

Model source code address: https://github.com/fchollet/keras/tree/master/examples

The code for the tests can be found in the author's GitHub project: https://github.com/jasmeetsb/deep-learning-keras-projects

Note: MXNet was left out of two of the tests because it does not support the latest version of Keras, and running those models with MXNet as the backend would require substantial code changes. In the other three tests, using MXNet as the backend also required some minor adjustments, mainly because the new version of Keras renamed some functions.
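The renames mentioned in the note are of the following kind. The mapping lists a few well-known argument names that changed between Keras 1 and Keras 2 (for example, `nb_epoch` became `epochs`). It is illustrative only, not the author's actual list of adjustments, and the helper `to_keras1_kwargs` is hypothetical.

```python
# Keras 2 argument name -> Keras 1 argument name (a small, partial sample).
KERAS2_TO_KERAS1 = {
    "epochs": "nb_epoch",
    "padding": "border_mode",
    "kernel_initializer": "init",
    "kernel_regularizer": "W_regularizer",
    "units": "output_dim",
}


def to_keras1_kwargs(kwargs):
    """Rename Keras 2 style keyword arguments to their Keras 1 equivalents.

    Names without a known rename are passed through unchanged.
    """
    return {KERAS2_TO_KERAS1.get(key, key): value for key, value in kwargs.items()}


fit_args = to_keras1_kwargs({"batch_size": 128, "epochs": 12})
print(fit_args)
```

A translation layer like this only covers simple renames; arguments whose meaning changed between versions would still need manual edits.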

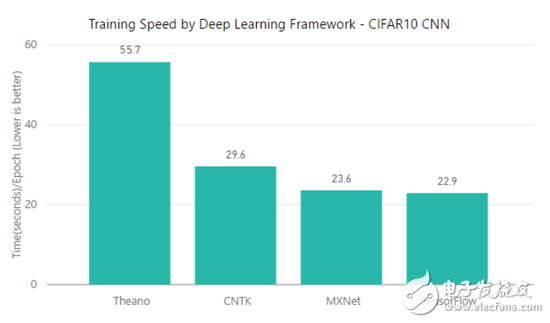

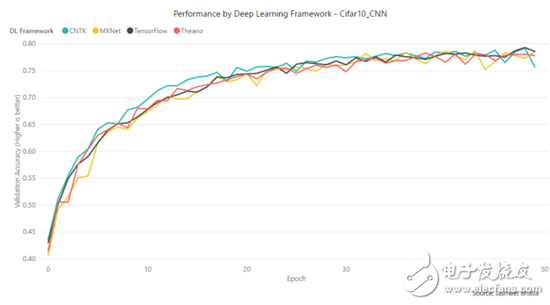

Test 1: CIFAR-10 & CNN

Type of learning model: Convolutional Neural Network (CNN)

Dataset/Task: CIFAR-10 Small Image Dataset

Goal: Classify images into 10 categories

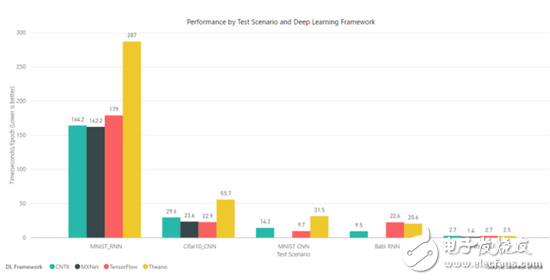

In training time per epoch, TensorFlow is slightly faster than MXNet.

In terms of accuracy/convergence speed, CNTK leads slightly during the first 25 epochs; after 50 epochs the other frameworks reach similar accuracy, while CNTK drops slightly.

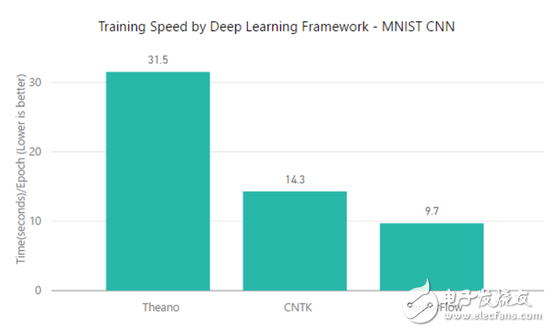

Test 2: MNIST & CNN

Type of learning model: CNN

Dataset/Task: MNIST handwritten digit dataset

Goal: Classify images into 10 handwritten digit classes

In this test, TensorFlow clearly has the best training time, but all frameworks behave similarly in accuracy/convergence speed.

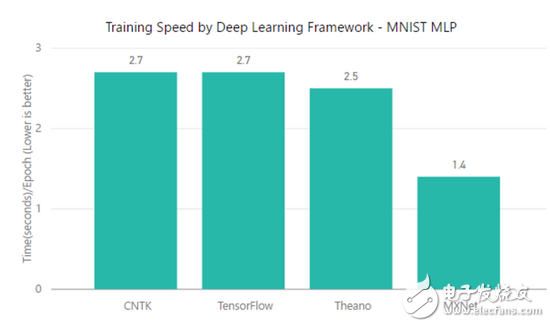

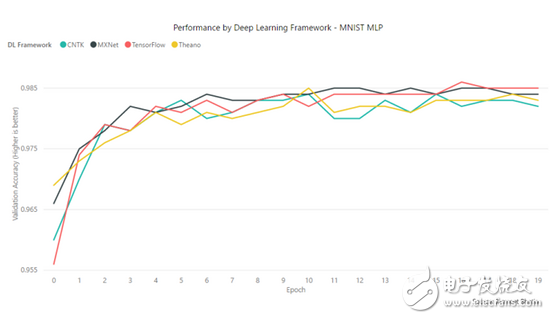

Test 3: MNIST & MLP

Type of learning model: multilayer perceptron / deep neural network

Dataset/Task: MNIST handwritten digit dataset

Goal: Classify images into 10 handwritten digit classes

In the standard neural network test on the MNIST dataset, CNTK, TensorFlow, and Theano achieved similar training times (2.5 to 2.7 s/epoch), while MXNet needed only 1.4 s/epoch. MXNet also has a slight advantage in accuracy/convergence speed.
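To put the per-epoch figures for this test in relative terms, the arithmetic below converts the quoted times into speedup ratios. It uses only the numbers stated in the text; the function name is hypothetical.

```python
def speedup(slower_seconds, faster_seconds):
    """How many times faster the second timing is than the first."""
    return slower_seconds / faster_seconds


mxnet_s_per_epoch = 1.4
others_s_per_epoch = (2.5, 2.7)  # range quoted for CNTK, TensorFlow, Theano

low, high = (speedup(t, mxnet_s_per_epoch) for t in others_s_per_epoch)
print(f"MXNet is roughly {low:.2f}x to {high:.2f}x faster per epoch")
```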

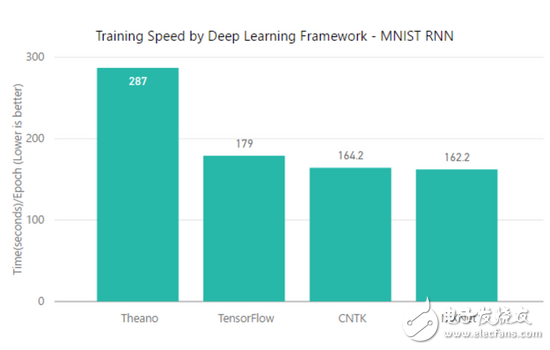

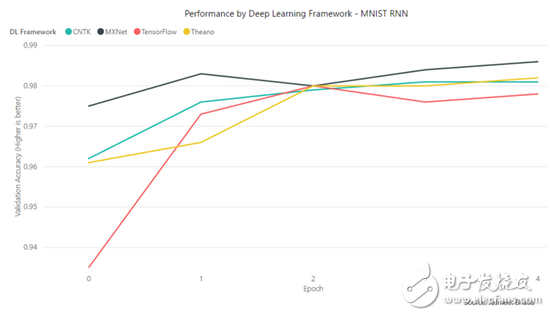

Test 4: MNIST & RNN

Type of learning model: hierarchical recurrent neural network (HRNN)

Dataset/Task: MNIST handwritten digit dataset

Goal: Classify images into 10 handwritten digit classes

In training time, CNTK and MXNet perform similarly (162 to 164 s/epoch), TensorFlow takes 179 s/epoch, and Theano is significantly slower.

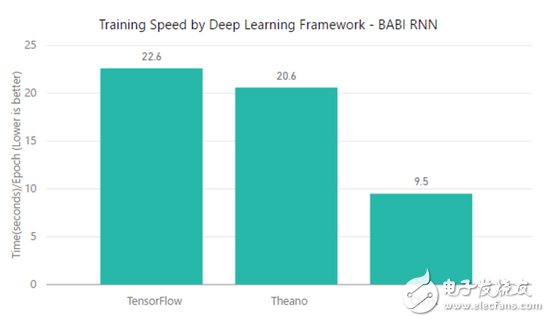

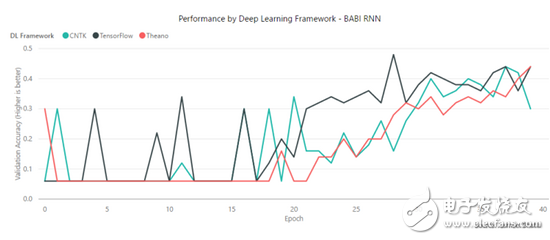

Test 5: bAbI & RNN

Type of learning model: Recurrent Neural Network (RNN)

Dataset/Task: bAbI project (https://research.fb.com/downloads/babi/)

Goal: Train two recurrent neural networks, one on the stories and one on the questions, so that the merged vector can answer a series of bAbI tasks.

MXNet was not used in this test. Per epoch, CNTK was markedly faster than both TensorFlow and Theano.

TensorFlow performed best in CNN tests, but not very well in RNN tests.

CNTK is much better than TensorFlow and Theano in the bAbI RNN and MNIST RNN tests, but worse than TensorFlow in the CNN tests.

MXNet is slightly better than CNTK and TensorFlow in the RNN test, and it outperforms all other frameworks on the MLP. However, MXNet does not support Keras v2 functions, so it could not be tested without modifying the code, and the results may deviate slightly.

Theano is better than TensorFlow and CNTK on the deep neural network (MLP) test.

Conclusion

As the results above show, every deep learning framework has its own strengths, and no framework is necessarily better than the others. CNTK can be used as the Keras backend for RNN use cases and TensorFlow for CNNs, while MXNet shows great performance potential, although full support for all Keras functions is still awaited. In the open source community, these frameworks are constantly being extended and improved to deliver better performance and easier deployment to production. Performance is paramount when considering these frameworks for production use, but in most cases we also need to consider ease of deployment and the ancillary tools that help manage machine learning models in production. Finally, all framework performance here was measured with each framework acting as a Keras backend, so there will be some error, but this article should at least give a sense of how these frameworks perform.
